Imagine sitting down at a fancy restaurant and tearing into a 16-ounce steak with your teeth instead of cutting it. This would be a pretty frustrating experience.

As with steak, software is much easier to manage when it is divided into smaller chunks before it is consumed, or rather, used. Lots of larger services take this approach today, splitting whatever they provide into many small microservices.

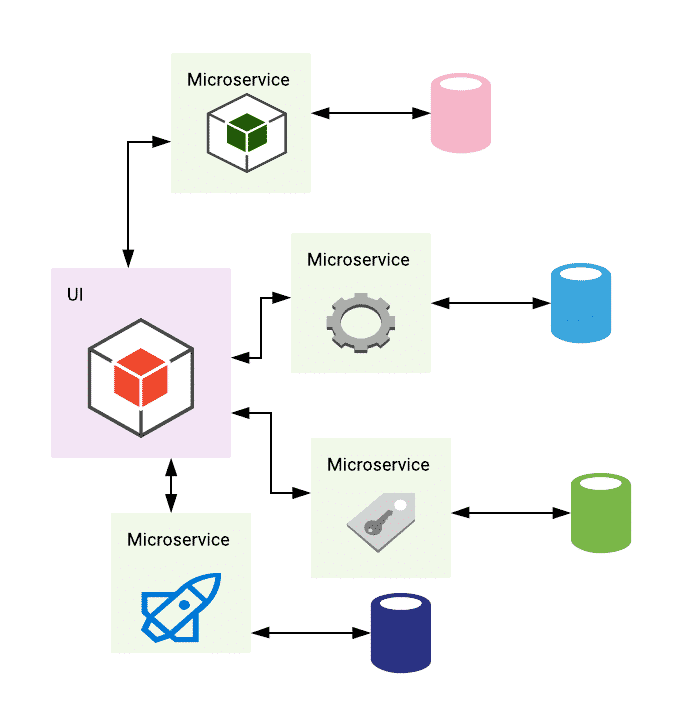

Microservices

Let’s imagine you’re using a site like Netflix.

Every time you perform some small action on the website, such as skipping forward, logging in or paying a bill, that function is handled by its own dedicated microservice. Netflix was one of the earliest and best-known adopters of this approach, and microservices like these are typically packaged in containers.

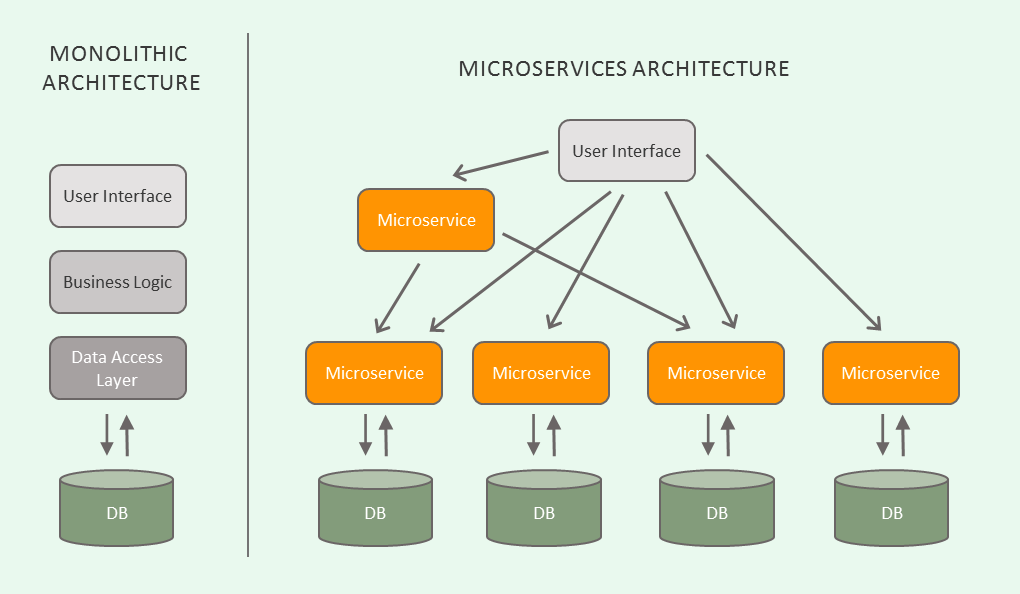

Old Method – Multiple Virtual Machines

The traditional way for a site to handle tons of users at once was to run lots of separate instances of the same code in virtual machines or VMs.

The basic idea is that a VM is a complete, separate operating system running on virtualized hardware inside the host machine. A typical server can run many VMs at once, which comes in handy when a site is being accessed by a large number of people.

Running a full VM and the software it needs typically involves millions of lines of code, because each VM carries its own operating system. When lots of users want to complete simple tasks, the server may have to open up more full VMs, which is inefficient and can hog CPU cycles and other resources.

Microservices in containers

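Microservices in containers, on the other hand, contain only the code for a single task, and containers share the host's operating system kernel instead of each running a full OS of their own. Instead of millions of lines of code, we could be talking about a few thousand.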

Returning to our Netflix example:

You could have one container for credit card authentication, another for the review system, and so on.

So, if a large number of people use a specific microservice, the system can simply start more instances of that specific container rather than having to open up more full-fat VMs.

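Google said in 2014 that it was starting more than two billion containers a week, because they are so easy to scale. Internally those run on Google's own cluster manager, Borg, but the company drew on that experience to build Kubernetes, an open-source system it released in 2014 to manage containers automatically.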
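The automatic scaling described above can be made concrete with the rule that Kubernetes' Horizontal Pod Autoscaler documents for choosing a replica count: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when that ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below applies the rule to each service independently, so only the busy service gains containers. It is a simplification, not Kubernetes' own code: the `desired_replicas` function, the service names, the CPU figures and the `min_replicas`/`max_replicas` defaults are all hypothetical.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified sketch of the Horizontal Pod Autoscaler's scaling rule.

    The min/max defaults here are hypothetical, chosen for illustration.
    """
    ratio = current_metric / target_metric
    # Skip scaling if the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical load per service: (current replicas, average CPU %, target CPU %)
services = {
    "payments": (2, 90.0, 50.0),
    "reviews": (3, 52.0, 50.0),
    "search": (4, 20.0, 50.0),
}

for name, (replicas, cpu, target) in services.items():
    print(name, replicas, "->", desired_replicas(replicas, cpu, target))
# payments 2 -> 4
# reviews 3 -> 3
# search 4 -> 2
```

Because each service is scaled on its own metric, the busy `payments` service doubles while the quiet `search` service shrinks, and nothing else has to change.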

Kubernetes Advantages

While this may appear to be of interest only to network engineers, it has real benefits for the home consumer.

If there is a problem with a service or the developers want to add a new feature, they don’t have to search through 10 million lines of code to find the problem and potentially break the entire thing in the process.

Instead, they can simply change one or two microservices while leaving the others alone, allowing fixes and new features to be pushed out more quickly and with less risk of causing other issues.

The container paradigm also improves speed because servers can run smaller microservices much more easily without being slowed down by several VMs. This helps back-end reliability as well: a problematic container can usually be restarted or replaced in a few seconds, which reduces the potential for lengthy periods of downtime.
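Much of that quick recovery comes from how orchestrators such as Kubernetes are built: controllers run a loop that compares the desired state (for example, how many copies of each service should be running) with what is actually running, and replace containers that have failed. The Python sketch below is a toy model of that control-loop idea, not Kubernetes' implementation; the `Container` class, the `reconcile` function and the service names are hypothetical.

```python
from dataclasses import dataclass, field
from itertools import count

# Hypothetical ID generator so each new container gets a unique number.
_next_id = count(1)


@dataclass
class Container:
    """A toy stand-in for a running container (not a real Kubernetes object)."""
    service: str
    healthy: bool = True
    container_id: int = field(default_factory=lambda: next(_next_id))


def reconcile(desired: dict[str, int], running: list[Container]) -> list[Container]:
    """One pass of a toy control loop.

    Drops unhealthy containers, then starts or stops containers until each
    service in `desired` has exactly the requested number of healthy copies.
    Containers for services not listed in `desired` are not kept.
    """
    healthy = [c for c in running if c.healthy]
    result: list[Container] = []
    for service, wanted in desired.items():
        current = [c for c in healthy if c.service == service]
        # Too many copies: keep only the first `wanted` of them.
        current = current[:wanted]
        # Too few copies: start fresh replacements.
        while len(current) < wanted:
            current.append(Container(service))
        result.extend(current)
    return result


# Usage: one "payments" container has failed.
running = [
    Container("payments"),
    Container("payments", healthy=False),
    Container("reviews"),
]
running = reconcile({"payments": 2, "reviews": 1}, running)
print([(c.service, c.container_id) for c in running])
# [('payments', 1), ('payments', 4), ('reviews', 3)]
```

Because the loop only ever moves the system toward the declared target, a crashed container is simply replaced on the next pass, without touching the other services.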

Kubernetes in Action

All of this means that containers are being used for a wide range of applications, and games such as League of Legends and Fortnite rely heavily on them to reduce lag by reducing the load on their servers.

Banks are also using containers with Kubernetes to handle large numbers of transactions at once without slowing down, and even IBM Watson, which is heavily used in the healthcare industry, has switched to using containers rather than monolithic virtual machines.

Small containers have made life a lot easier; consider them the “Digital Tupperware” of the Internet.